Not All Explorations Are Equal: Harnessing Heterogeneous Profiling Cost for Efficient MLaaS Training

Abstract

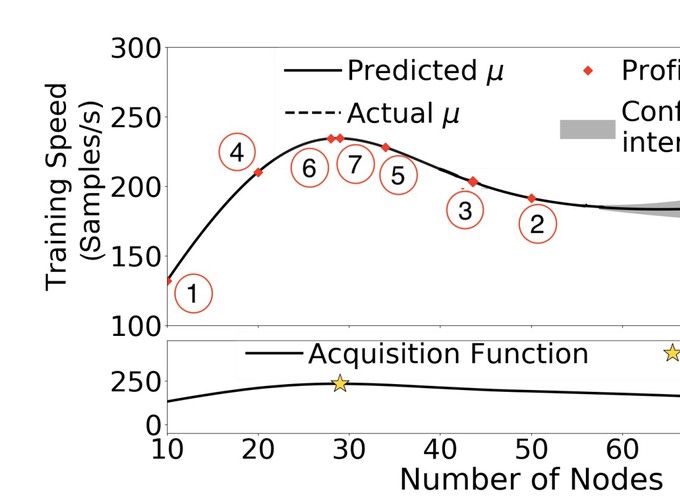

Machine-Learning-as-a-Service (MLaaS) enables practitioners and AI service providers to train and deploy ML models in the cloud using diverse and scalable compute resources. A common problem for MLaaS users is choosing among a variety of training deployment options, notably scale-up (using more capable instances) and scale-out (using more instances), subject to budget limits and/or time constraints. State-of-the-art (SOTA) approaches employ analytical modeling to find the optimal deployment strategy. However, they have limited applicability because they must be tailored to specific ML model architectures, training frameworks, and hardware. To adapt quickly to the fast-evolving design of ML models and hardware infrastructure, we propose a new Bayesian Optimization (BO) based method, HeterBO, for exploring the optimal deployment of training jobs. Unlike existing BO approaches for general applications, we consider the heterogeneous exploration cost and a machine-learning-specific prior to significantly improve the search efficiency. This paper culminates in a fully automated MLaaS training Cloud Deployment system (MLCD) driven by the highly efficient HeterBO search method. We have extensively evaluated MLCD on AWS EC2, and the experimental results show that MLCD outperforms two SOTA baselines, conventional BO and CherryPick, by 3.1X and 2.34X, respectively.
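To make the idea of heterogeneous exploration cost concrete, the following is a minimal sketch of one common cost-aware heuristic from the BO literature: ranking candidate deployments by expected improvement per unit of profiling cost. It is an illustration only and is not claimed to be the HeterBO acquisition function. The candidate names, posterior means and standard deviations, and profiling costs are all hypothetical values chosen for the example.

```python
import math


def normal_pdf(z):
    """Standard normal probability density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    """Standard normal cumulative distribution."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mean, std, best):
    """Expected improvement for a minimization objective.

    mean, std: surrogate-model posterior at a candidate deployment.
    best: lowest objective value (e.g. training time) observed so far.
    """
    if std <= 0.0:
        return max(best - mean, 0.0)
    z = (best - mean) / std
    return (best - mean) * normal_cdf(z) + std * normal_pdf(z)


def pick_next(candidates, best):
    """Pick the candidate with the highest EI per unit of profiling cost.

    Each candidate is a dict with hypothetical keys:
    'name', 'mean', 'std', and 'cost' (cost of profiling that deployment).
    """
    return max(
        candidates,
        key=lambda c: expected_improvement(c["mean"], c["std"], best) / c["cost"],
    )


if __name__ == "__main__":
    # Hypothetical deployments: the posteriors are identical, but profiling
    # the larger cluster costs eight times as much.
    candidates = [
        {"name": "1 x small instance", "mean": 10.0, "std": 2.0, "cost": 1.0},
        {"name": "8 x small instance", "mean": 10.0, "std": 2.0, "cost": 8.0},
    ]
    print(pick_next(candidates, best=11.0)["name"])
```

A cost-agnostic BO search would treat the two candidates above as equally attractive. Dividing by profiling cost steers early exploration toward cheap deployments, which is the intuition behind treating explorations as unequal.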